Technical Background¶

This page includes some references about Bayes’ Theorem and Bayesian inference and discusses the particulars of the implementation of these ideas within bayesim.

Bayes’ Theorem¶

There are a plethora of great explanations of Bayes’ Theorem out there already, so I won’t go through all the bayesics here but instead refer you to one of those linked above or any number of others you can find online or in a textbook.

Assuming you understand Bayes’ Theorem to your own satisfaction at this point, let’s remind ourselves of some terminology.

The \(\color{firebrick}{\mathbf{\text{posterior probability}}}\) of our hypothesis \(H\) given observed evidence \(E\) is the result of a Bayesian update to the \(\color{darkorange}{\mathbf{\text{prior}}}\) estimate of the probability of \(H\) given the \(\color{darkmagenta}{\mathbf{\text{likelihood}}}\) of observing \(E\) in a world where \(H\) is true and the probability of observing our \(\color{teal}{\mathbf{\text{evidence}}}\) in the first place.

Bayesian Inference and Parameter Estimation¶

Note

I haven’t found an online explanation of this material at a not-excessively-mathy level (I firmly believe that you don’t need a lot of knowledge of mathematical terminology to understand this; it can really be done in a very visual way) so I wrote my own. If you know of another, please send it to me and I’d be happy to link to it here!

Update!! I found some nice explanations/examples in this repo! Check them out for some more material in addition to what I’ve included here.

Most of the examples used to explain Bayes’ Theorem have two hypotheses to disginguish between (e.g. “is it raining?”: yes or no). However, to use Bayes’ Theorem for parameter estimation, which is the problem of interest here, we need to generalize to many more than two hypotheses, and those hypotheses may be about the values of multiple different parameters. In addition, we would like to incorporate many pieces of evidence, necessitating many iterations of the Bayesian calculation. These and other factors can make it confusing to conceptualize how to generalize the types of computations we do to estimate the probability of the answer to a yes-or-no question or a dice roll to a problem statement relevant to a more general scientific/modeling inquiry. I will walk through these factors here.

Many hypotheses, in multiple dimensions¶

The first step is let our hypotheses \(H\) range over more than two values. That is, rather than having \(H_1\) = “yes, it is raining” and \(H_2\) = “no, it is not raining”, we would instead have something like \(H_1\) = “the value of parameter \(A\) is \(a_1\)”, \(H_2\) = “the value of parameter \(A\) is \(a_2\)”, etc. for as many \(a_n\) as we wanted to consider. While we could then in principle enumerate many different statements of Bayes’ Theorem of the form

this is quite cumbersome and so instead we will write

with the understanding that this probability is not a single value but rather a function over all possible values of \(A\). This also allows the number of equations we have to write not to explode when we want to add another fitting parameter, and instead the probability function just to be defined over an additional dimension:

Iterative Bayesian updates¶

The next step is to reframe Bayes’ Theorem as an explicitly iterative procedure. Imagine we’ve incorporated one piece of evidence \(E_1\), resulting in a posterior probability \(P(H|E_1)\). To update our posterior again given further observation \(E_2\), we simply let this posterior become our new prior:

Hopefully now it’s easy to see that for n pieces of evidence, we can say that

Where does the likelihood come from?!? Data modeling!¶

At this point, it would be natural to say “Sure, that math all makes sense, but how do I actually know what that likelihood \(P(E|H)\) is?!?”

This is where having a model of our experimental observations comes in. This model could take many forms - it might be a simple analytical equation, or it might be a sophisticated numerical solver. The key traits are that it can accurately predict the outcome of a measurement on your system as as function of all relevant experimental conditions as well as fitting parameters of interest.

More specifically, suppose your measurement yields some output variable \(O\) as a function of various experimental conditions {\(C\)}. Then your evidence looks like

Suppose also that you have a set of model parameters {\(P\)} that you wish to know the values of. That means that your posterior distribution after \(m\) observations will look something like

where the hypotheses are sets of values of the parameters {\(P\)}, i.e., points in the fitting parameter space. Then your model must take the form

Given an observation \(O_m\) at conditions {\(C_1^m,C_2^m,...C_n^m\)} (where the \(m\) superscript indicates specific values of the conditions rather than their full ranges), we can compute the likelihood over all parameters {\(P\)} by evaluating our model for these conditions {\(C^m\)} and comparing the simulated outputs {\(M(\{P\},\{C^m\})\)} to the measured output \(O_m\). But then how do we know what probabilities to assign as a function of how much the measured and simulated outputs differ? Glad you asked…

Experimental Uncertainty¶

Our experimental measurement \(O_m\) will have some associated uncertainty \(\Delta O\), generally a known property of our equipment/measurement technique. Quantifying this uncertainty is key to understanding how to calculate likelihoods. Specifically, we need to introduce an error model. We’ll use a Gaussian distribution, a very common pattern for experimental errors in all kinds of measurements:

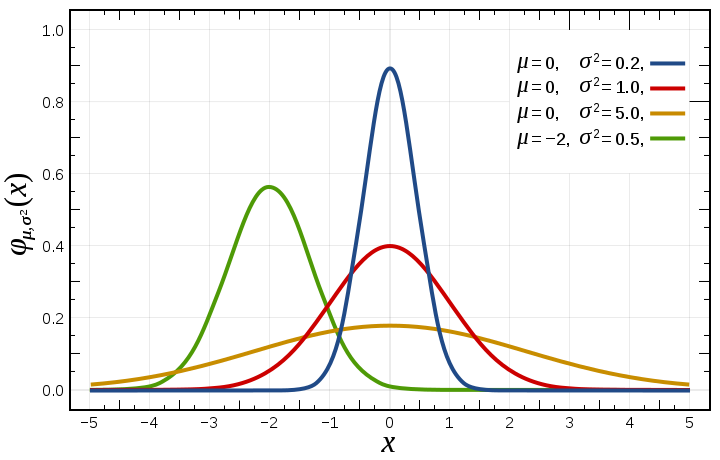

where \(\mu\) is the mean, \(\sigma\) is the standard deviation, and the term in front of the exponential is just a normalizing constant (to make sure that the probability distribution integrates to 1). The distribution looks like this:

You can see the impact of the two parameters - a larger \(\sigma\) value makes the distribution wider, while \(\mu\) simply shifts the center. (Image from Wikipedia.)

What this means for our example is that our measurement of some value \(O_m^0\) for our output parameter \(O\) is converted to a distribution of possible “true” values for \(O_m\):

(I’m leaving off the normalization constant for convenience.)

Model Uncertainty¶

Of course, when our model function isn’t a simple analytical equation but rather a numerical solver of some sort, we can’t evaluate it on a continuous parameter space but we instead have to discretize the space into a grid and choose points on that grid at which to simulate. This introduces a so-called “model uncertainty” proportional to the magnitude of the variation in the model output as one moves around the fitting parameter space. This model uncertainty is calculated in bayesim at each experimental condition for each point in the parameter space as the largest change in model output from that point to any of the immediately adjacent points.

Then, when we compute likelihoods, we use the sum of these two uncertainties as the standard deviation of our Gaussian.

Especially if the parameter space grid is coarse, incorporating this model uncertainty is critical - if the variation in output variable from one grid point to another is significantly larger than the experimental uncertainty but this uncertainty is used as the standard deviation, it is possible that likelihood could be computed as zero everywhere in the parameter space, just because the measured output corresponded to parameters between several of the chosen sample points. And that wouldn’t be very good.

An illustrative example: Kinematics¶

This probably all seems a bit abstract at this point, so illustrate how we do this in practice, let’s use a simple example. Suppose we want to estimate the value of \(g\), the acceleration due to gravity near Earth’s surface, and \(v_0\), the initial velocity of a vertically launched projectile (e.g. a ball tossed straight up), based on some measured data about the trajectory of the ball. We know from basic kinematics that the height of the ball as a function of time should obey (assuming that the projectile’s initial height is defined as 0)

This function represents our model of the data we will measure and we can equivalently write

where we’ve now explicitly delineated our parameters \(g\) and \(v_0\) and our measurement condition \(t\).

Now let’s suppose we make a measurement after 2 seconds of flight and find that \(y(2)=3\), with an uncertainty in the measurement of 0.2. Recalling our Gaussian error model from above, we can write

(Assume model uncertainty is negligible.) But what we really want is a probability distribution over our parameters, not over the measurement value itself. Fortunately, our model function lets us do just that! We can translate our distribution over possible measured values into one over possible parameter values using the model function:

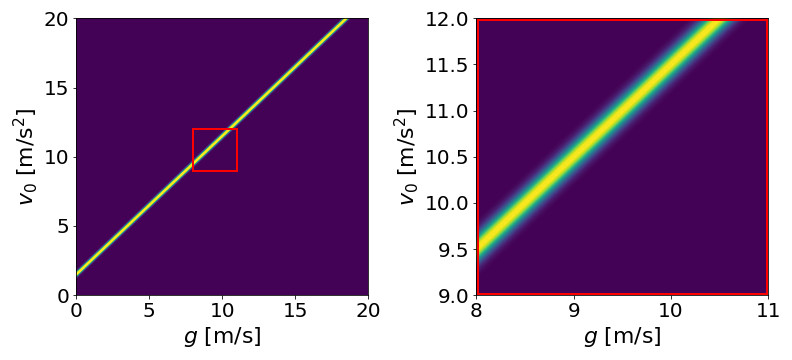

Now we can visualize what that distribution looks like in “\(v_0\)-\(g\)” space:

On the left, the probability distribution over a wide range of possible values. On the right, zoomed in to near the true value of \(g\) to show Gaussian spread.

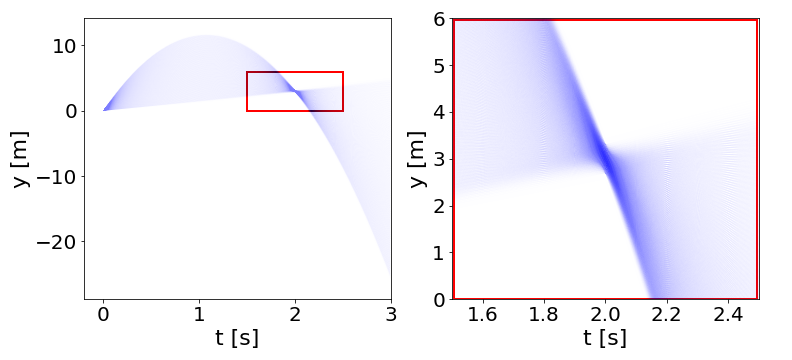

Another way we might want to visualize would be in the space of what the actual trajectories look like:

On the left, \(y(t)\) trajectories from \(t=0\) to \(t=3\). On the right, zooming in on the region indicated to see spread around y(2)=3.

So we can see that what the inference step did was essentially “pin” the trajectories to go through (or close to) the measured point at (t,*y*)=(2.0,3.0).

Now let’s suppose we take another measurement, a short time later: y(2.3)=0.1, but with a larger uncertainty, this time of 0.5. Now we return to Bayes’ Theorem - our prior distribution will be the conditional distribution from Equation (15) above, and the likelihood will be a new conditional distribution generated in exactly the same way but for this new data point. What does the posterior look like?

(Note that the axis limits are smaller than above)

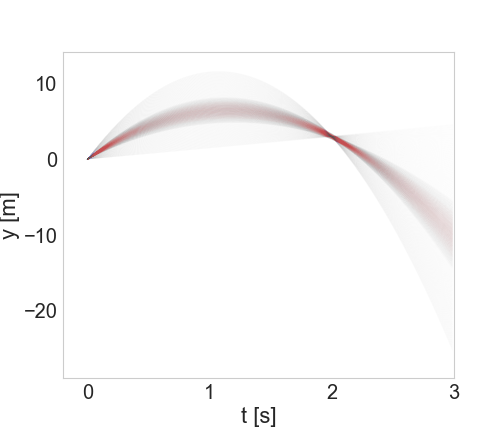

As we would expect, we’re starting to zero in on a smaller region. And how about the trajectories?

Newly refined set of trajectories shown in red, overlaid on (paler) larger set from the previous step.

As expected, we’ve further winnowed down the possible trajectories. If we continued this process for more and more measurements, eventually zeroing in on the correct values with greater and greater precision.

To see this example as implemented in bayesim, check out the Examples page!